I’m having a problem getting a Node.js app to run on OpenShift.

I’ve set up my OpenShift project to pull from a Git repo.

My server.listen call is made like this:

```javascript
var http = require('http');

// myServerFunction is my app's request handler, defined elsewhere.
var port = process.env.OPENSHIFT_NODEJS_PORT || process.env.PORT || 8080;
var ip = process.env.OPENSHIFT_NODEJS_IP || process.env.IP || '127.0.0.1';

var server = http.createServer(myServerFunction);
server.listen(port, ip);
```

The pull and build work fine. I can see in the pod terminal that my app is running, because there is some console.log output indicating this. I can also see that neither process.env.OPENSHIFT_NODEJS_IP nor process.env.IP is set, and that the port has defaulted to 8080.

If I go into the pod’s terminal and execute curl http://localhost:8080 I see precisely the html output I would expect from my app.

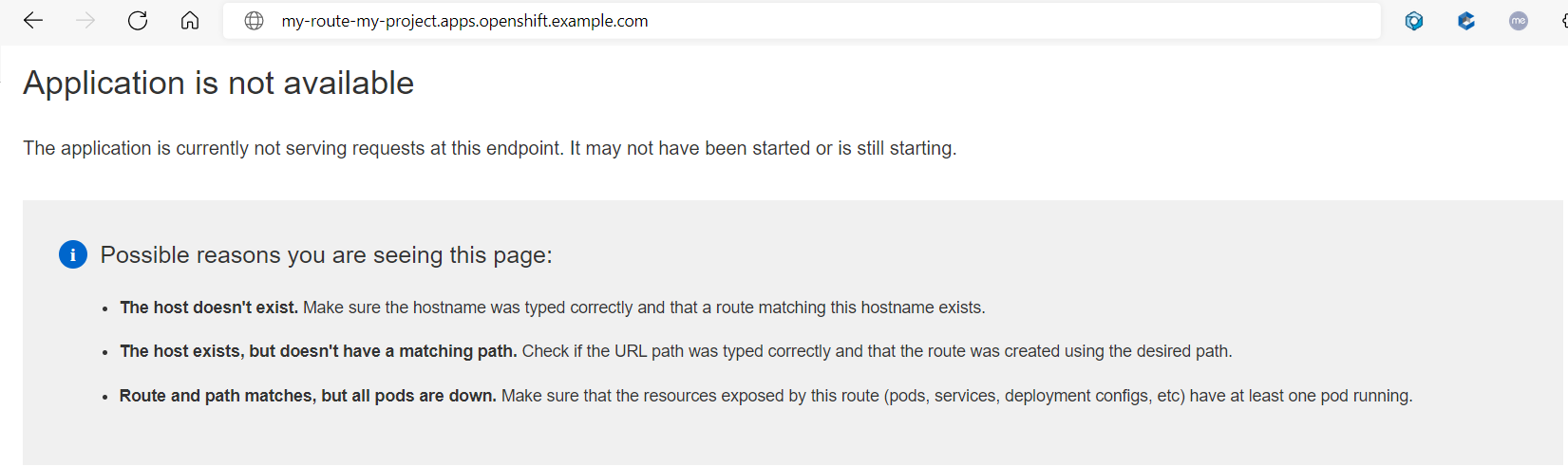

However, when I click on the Overview tab and find the external URL for my app (which has the format http://<application>-<domain>.1d35.starter-us-east-1.openshiftapps.com), clicking that link results in OpenShift’s «Application is not available» error page.

It seems to me that my Node app is running perfectly well but is not connected to the external URL. I suspect that 8080 is not the correct port, and that I need to do more configuration for my Node.js app to receive a value in process.env.OPENSHIFT_NODEJS_PORT.

In other Stack Overflow answers I’ve also seen 0.0.0.0 used as the default when process.env.OPENSHIFT_NODEJS_IP is not set. Could this make a difference?

Overall, how can I make the external URL reach my Node.js server?

Thanks for any help!
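On the 0.0.0.0 question: a server bound to 127.0.0.1 only accepts connections made over the loopback interface inside the pod, which is why curl http://localhost:8080 succeeds from the pod terminal. Traffic from the service and the router arrives on the pod's own IP address instead. A minimal sketch that keeps the question's environment variable names but defaults the bind address to 0.0.0.0 (all IPv4 interfaces); the handler body is a hypothetical stand-in for myServerFunction.

```javascript
const http = require('http');

// Hypothetical stand-in for the question's myServerFunction.
function myServerFunction(req, res) {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end('ok\n');
}

// Read port and bind address from the environment. The fallback address is
// 0.0.0.0 rather than 127.0.0.1, so connections arriving on the pod IP
// (from the service or the router) are accepted, not just loopback ones.
function listenOptions(env) {
  return {
    port: Number(env.OPENSHIFT_NODEJS_PORT || env.PORT || 8080),
    host: env.OPENSHIFT_NODEJS_IP || env.IP || '0.0.0.0',
  };
}

// Create the HTTP server and start listening; returns the http.Server.
function start(env) {
  const { port, host } = listenOptions(env);
  const server = http.createServer(myServerFunction);
  server.listen(port, host, () => {
    console.log(`listening on ${host}:${server.address().port}`);
  });
  return server;
}

module.exports = { listenOptions, start };
```

In the app's entry file, start(process.env) would replace the createServer/listen pair from the question.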

The application worked fine, but all of a sudden it stopped working.

After a successful deployment of the application, it cannot be accessed in a browser.

«Application is not available» appears every time. The logs look just fine.

Version

oc v3.6.173.0.7

kubernetes v1.6.1+5115d708d7

features: Basic-Auth

Server https://api.starter-ca-central-1.openshift.com:443

openshift v3.7.0-0.143.3

kubernetes v1.7.0+80709908fd

Steps To Reproduce

- Deploy your app successfully.

- Go to the app site in a web browser.

Current Result

«Application is not available» page.

Expected Result

App home page.

Additional Information

Python 2.7 Django app.

Tip 1:

This may be irrelevant, but I have a warning bar in the Overview panel: «An error occurred getting metrics. Open Metrics URL | Don’t Show Me Again». Clicking «Open Metrics URL» leads me to the «Application is not available» page.

Tip 2:

To try to figure it out myself, I created a route for my database. After some time, it still showed the tooltip icon: «The route is not accepting traffic yet…»

Edit:

Tip 3:

Using a remote shell to log in to the app pod (oc rsh), the command curl localhost:8080 works just fine.

Edit2:

Fixed some typos in the text.

Let’s say you have deployed an application to OpenShift, and requesting the application returns "Application is not available".

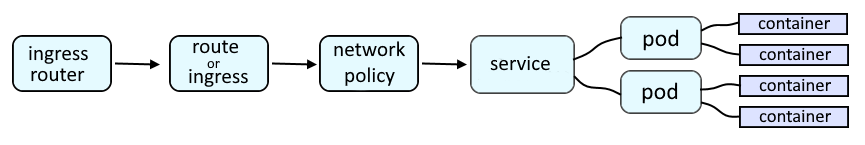

An OpenShift route or an Ingress route provides a URL such as http://my-route-my-project.apps.openshift.example.com, which is used to route a request to a service, which routes it to a pod, then to the container in the pod, and finally to the application running in the container.
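Because a request passes through each of these hops in turn, it helps to test them one at a time, from the pod outwards. The curl checks used in this walkthrough can be sketched in Node.js as a function that requests a URL once and reports either the HTTP status or the socket error code (for example ECONNREFUSED when nothing is listening). The name checkUrl and the 5-second default timeout are assumptions for illustration.

```javascript
const http = require('http');

// Request a URL once and report what happened, roughly what `curl -v`
// shows: an HTTP status, or the socket error code if the connection failed.
function checkUrl(url, timeoutMs = 5000) {
  return new Promise((resolve) => {
    const req = http.get(url, (res) => {
      res.resume(); // discard the body; only the status matters here
      resolve({ ok: res.statusCode < 400, status: res.statusCode });
    });
    // Abort requests that hang; destroy() then emits 'error' with this Error.
    req.setTimeout(timeoutMs, () => req.destroy(new Error('timeout')));
    req.on('error', (err) => resolve({ ok: false, error: err.code || err.message }));
  });
}

module.exports = { checkUrl };
```

If Node.js is available in a pod of the project (for example via oc rsh), the same function can be pointed at the pod address, at http://my-service:8080 and at the route URL in turn; the first hop that fails is where to look.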

PODS

The oc get pods command can be used to determine if the pod is Running.
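For scripting the same check, oc get pods -o json prints a PodList whose items carry status.phase and, per container, status.containerStatuses[].ready and restartCount. A minimal sketch that flags pods that are not Running, not ready, or restarting; treating completed build pods (phase Succeeded) as fine is a choice made here for illustration.

```javascript
// Summarise the PodList printed by `oc get pods -o json`.
// A pod is flagged as suspect when it is not Running, when any container is
// not ready, or when its containers have restarted. Pods in phase Succeeded
// (for example completed build pods) are not flagged.
function summarizePods(podList) {
  return podList.items.map((pod) => {
    const statuses = (pod.status && pod.status.containerStatuses) || [];
    const phase = pod.status ? pod.status.phase : 'Unknown';
    const restarts = statuses.reduce((sum, c) => sum + (c.restartCount || 0), 0);
    const ready = statuses.length > 0 && statuses.every((c) => c.ready);
    const suspect = phase !== 'Succeeded' && (phase !== 'Running' || !ready || restarts > 0);
    return { name: pod.metadata.name, phase, ready, restarts, suspect };
  });
}

module.exports = { summarizePods };
```

For example, after saving the output with oc get pods -o json > pods.json (a hypothetical file name), summarizePods(JSON.parse(require('fs').readFileSync('pods.json', 'utf8'))) lists each pod with its phase, readiness and restart count.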

```
~]# oc get pods
NAME           READY   STATUS    RESTARTS   AGE
my-pod-9mzm2   1/1     Running   0          8d
my-pod-zm5g6   1/1     Running   0          8d
```

Use the oc describe pod command to determine the ports the pod is listening for connections on.

```
~]$ oc describe pod my-pod-zm5g6
Containers:
  my-app:
    Ports:  8080/TCP
```

If there are two (or more) pods in the namespace, the oc exec and curl commands can be used to determine whether one of the other pods is able to get a response from the pod that is returning connection refused. In this example, the connection is successful, returning HTTP response code 200 OK.

```
~]$ oc exec pod/my-pod-9mzm2 -- curl --silent --insecure my-pod-zm5g6:8080 -v
* About to connect() to my-pod-zm5g6 port 8080 (#0)
*   Trying my-pod-zm5g6...
* Connected to my-pod-zm5g6 (10.131.4.136) port 8080 (#0)
> Host: my-pod-zm5g6:8080
< HTTP/1.1 200 OK
```

SERVICE

The oc get services command can be used to determine if a service has been created for the pod that is not available. If not, the oc expose pod command can be used to create the service.
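A service finds its pods through its label selector, and the Endpoints line in oc describe service lists the IP:port of each ready pod whose labels match. An empty Endpoints line therefore usually means the selector matches no ready pod. A simplified model of that matching, not something Kubernetes asks you to implement; the pod objects with labels, ip and ready fields are a hypothetical shape chosen for illustration.

```javascript
// Does a pod's label set satisfy the service selector? Every key/value pair
// in the selector must be present on the pod with the same value.
function selectorMatches(selector, labels) {
  return Object.entries(selector).every(([key, value]) => labels[key] === value);
}

// Simplified model of a service's endpoint list: IP:targetPort of every
// ready pod whose labels match. A service without a selector gets no
// automatic endpoints, so an empty selector yields an empty list here.
function endpointsFor(service, pods) {
  if (!service.selector || Object.keys(service.selector).length === 0) return [];
  return pods
    .filter((pod) => pod.ready && selectorMatches(service.selector, pod.labels))
    .map((pod) => `${pod.ip}:${service.targetPort}`);
}

module.exports = { selectorMatches, endpointsFor };
```

With the selector deployment=my-pod-zm5g6 from the example, only a ready pod carrying that exact label contributes an endpoint; a typo in either the label or the selector leaves the list empty.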

```
~]$ oc get services
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
my-service   ClusterIP   172.30.166.121   <none>        8080/TCP   3m24s
```

The oc describe service command can be used to show details about the service. Notice in this example that the service forwards requests to my-pod-zm5g6 on port 8080.

```
~]$ oc describe service my-service
Name:              my-service
Namespace:         my-project
Labels:            app=my-pod-zm5g6
Annotations:       <none>
Selector:          deployment=my-pod-zm5g6
Type:              LoadBalancer
IP:                172.30.166.121
Port:              8080-tcp  8080/TCP
TargetPort:        8080/TCP
Endpoints:         10.131.5.3:8080
Session Affinity:  None
Events:            <none>
```

If there are two (or more) pods in the namespace, the oc exec and curl commands can be used to determine whether you are able to get a response from the pod that is not available by going through the service. In this example, the connection is successful, returning HTTP response code 200 OK.

```
~]# oc exec pod/my-pod-vmzmz -- curl my-service:8080 -v
* About to connect() to my-service port 8080 (#0)
*   Trying 172.30.166.121...
* Connected to my-service (172.30.166.121) port 8080 (#0)
> Host: my-service:8080
< HTTP/1.1 200
```

ROUTE

The oc get routes command can be used to list the routes. If there is no route for the service, the oc expose service command can be used to create one.

```
~]# oc get routes
NAME       HOST/PORT                                        PATH   SERVICES     PORT   TERMINATION          WILDCARD
my-route   my-route-my-project.apps.openshift.example.com          my-service   8080   reencrypt/Redirect   None
```

The curl command can be used to see if you are able to connect using the route.

```
~]$ curl my-route-my-project.apps.openshift.example.com -v
* TCP_NODELAY set
* connect to 10.84.188.21 failed: Connection refused
* Failed to connect to my-route-my-project.apps.openshift.example.com: Connection refused
* Closing connection 0
curl: (7) Failed to connect to my-route-my-project.apps.openshift.example.com: Connection refused
command terminated with exit code 7
```

The oc describe route command can be used to display more details about the route. The route should be exposed on a router. In this example, the route is exposed on the default router.

```
~]$ oc describe route my-route
Name:             my-route
Namespace:        my-project
Created:          17 minutes ago
Labels:           name=my-route
Annotations:      openshift.io/host.generated=true
Requested Host:   my-route-my-project.apps.openshift.example.com
                  exposed on router default (host router-default.apps.openshift.example.com) 17 minutes ago
Path:             /example
TLS Termination:  <none>
Insecure Policy:  <none>
Endpoint Port:    8080

Service:    my-service
Weight:     100 (100%)
Endpoints:  10.217.0.68:8080
```

The default router pod should be in the openshift-ingress project.

```
~]$ oc get pod --namespace openshift-ingress
NAME                             READY   STATUS    RESTARTS      AGE
router-default-f6d44996c-sljgl   1/1     Running   2 (58m ago)   56d
```

The router pods run an HAProxy (High Availability Proxy) load balancer. The oc exec command can be used to determine whether the pod and service have been exposed on HAProxy.

```
~]$ oc exec pod/router-default-76c5c89559-dclkw --namespace openshift-ingress -- cat /var/lib/haproxy/conf/haproxy.config | grep my-route
server pod:my-pod:my-service:8080-tcp:10.129.7.69:8080 10.129.7.69:8080 cookie 15b9071e86b87a2b4b6f976291de96cf weight 256 check inter 5000ms
```

NETWORK POLICIES

The oc get networkpolicies command can then be used to list the network policies that may be denying incoming (ingress) requests:

- from other projects / namespaces

- that do not match a pod's label

- from an IP address outside of an IP subnet
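The last case corresponds to an ipBlock rule in a NetworkPolicy, which admits traffic only from source addresses inside a given CIDR range. A minimal IPv4-only sketch of that subnet test; it ignores the except list an ipBlock can also carry, and the example ranges are assumptions.

```javascript
// Convert a dotted IPv4 address to an unsigned 32-bit integer.
function ipToInt(ip) {
  const parts = ip.split('.').map(Number);
  if (parts.length !== 4 || parts.some((p) => !Number.isInteger(p) || p < 0 || p > 255)) {
    throw new Error(`invalid IPv4 address: ${ip}`);
  }
  return ((parts[0] << 24) >>> 0) + (parts[1] << 16) + (parts[2] << 8) + parts[3];
}

// Is `ip` inside the CIDR range, e.g. inCidr('10.131.4.136', '10.128.0.0/14')?
function inCidr(ip, cidr) {
  const [base, bitsText] = cidr.split('/');
  const bits = Number(bitsText);
  if (!Number.isInteger(bits) || bits < 0 || bits > 32) {
    throw new Error(`invalid prefix length in ${cidr}`);
  }
  // JavaScript shifts are taken mod 32, so /0 needs an explicit zero mask.
  const mask = bits === 0 ? 0 : (~0 << (32 - bits)) >>> 0;
  return ((ipToInt(ip) & mask) >>> 0) === ((ipToInt(base) & mask) >>> 0);
}

module.exports = { ipToInt, inCidr };
```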

```
~]$ oc get networkpolicies
NAME                        POD-SELECTOR   AGE
allow-ingress-region-east   region=east    41s
```

Andreas

May 23, 2017, 2:39:39 PM

to OpenShift

While slowly clicking through my deployed v3 application's pages, I often get

Application is not available

The application is currently not serving requests at this endpoint. It may not have been started or is still starting.

and there seems to be no specific reason for that, because the docs say that applications go into sleep mode after 30 minutes of inactivity.

So I feel that v3 is almost unusable at its current stage. The best thing that v2 had is gone, and v3 is also slower than v2, so it is somewhat disappointing after using v3.

Graham Dumpleton

May 23, 2017, 5:34:48 PM

to open…@googlegroups.com

Do:

oc get pods --watch

One reason would be that your application pod keeps crashing.

So check to see if the pod keeps being replaced.

Graham

Andreas

May 23, 2017, 5:49:31 PM

to OpenShift

This is what I get:

```
C:\Users\User>oc get pods --watch
NAME           READY   STATUS      RESTARTS   AGE
easy-1-87mfs   1/1     Running     0          3h
easy-1-build   0/1     Completed   0          3h
easy-1-pkfbk   1/1     Running     0          3h
```

Graham Dumpleton

May 23, 2017, 5:55:25 PM

to open…@googlegroups.com

So they aren’t churning, if it stays like that.

Have you checked logs for both containers, as well as the events generated by OpenShift about the application to see if there is anything out of the ordinary?

Have you got into the containers from the web console or with oc rsh, and used a tool like curl inside the container to make requests against it, to see if they always work?

Are you implementing any readiness check?

Graham

Andreas

May 23, 2017, 6:25:39 PM

to OpenShift

What do you mean by ‘churning’? Crashing?

Here is how it was before:

We see a warning here that the quota was reached, but it was still within the allowed limits, yes?

When I scrolled through the logs, I could not find any unexpected errors or anything like that.

Then I scaled down to 1 pod, and the issue seems to be gone. Here is how it is now:

but now it uses 512 MiB of memory instead of 1 GiB, doesn’t it?

So was scaling the cause of the issue?

Graham Dumpleton

May 23, 2017, 6:44:40 PM

to open…@googlegroups.com

When I say churning, I mean the application is crashing and the pod being replaced with a new one by OpenShift, with a different name.

My concern, given that the screenshots you posted don’t show any health checks, was that one of the instances hadn’t started up correctly and was not actually serving requests.

Now, if you are using a web browser, where cookies are used, requests would normally be routed to the same instance because of the sticky sessions implemented by the routing layer. So if you got the good instance, it would always look good; if you got the bad one, always bad. If, however, you were using a command-line client such as curl, which does not keep the cookie, requests would be routed to either instance, so if one isn’t working, the occasional request would fail. If scaling down actually shut down the bad instance, all requests would then appear to work.
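The browser-versus-curl difference can be reproduced with a toy model: two instances, one of them broken, and a router that either pins a client to the instance named in its cookie or, for a client without cookies, moves on to the next instance. Round-robin here is a simplification chosen for illustration, not a claim about the router's actual balancing algorithm.

```javascript
// Toy model of cookie-based session affinity versus cookie-less requests.
// healthy[i] says whether instance i serves requests correctly.
// Returns the outcome ('ok' or 'fail') of each of `requests` requests
// made by a single client.
function simulate(healthy, requests, clientKeepsCookie) {
  let next = 0;      // round-robin cursor for requests without a cookie
  let cookie = null; // instance index remembered by a browser-like client
  const outcomes = [];
  for (let i = 0; i < requests; i++) {
    let instance;
    if (clientKeepsCookie && cookie !== null) {
      instance = cookie; // sticky: same instance every time
    } else {
      instance = next % healthy.length; // no cookie: pick the next instance
      next += 1;
      if (clientKeepsCookie) cookie = instance; // the router's cookie is stored
    }
    outcomes.push(healthy[instance] ? 'ok' : 'fail');
  }
  return outcomes;
}

module.exports = { simulate };
```

simulate([true, false], 4, true) gives four 'ok' results and simulate([false, true], 4, true) four 'fail' results, while simulate([true, false], 4, false) alternates 'ok' and 'fail', matching the "occasional request would fail" seen with curl.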

As a next step, I would suggest you implement both a readiness and liveness probe as these will help to detect when your application isn’t serving requests, or constantly failing requests.

The readiness probe controls whether the pod IP is included in the list of endpoints where traffic can be sent. The liveness probe detects application failures and can trigger a restart of the failing pod.

For both probes, use an HTTP check against a URL endpoint of the application that indicates whether the application is running okay.

Graham
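A minimal Node.js sketch of such an endpoint, assuming a hypothetical /health path that both the readiness and the liveness probe are configured to request. It answers immediately without doing any work, so it only reports whether the server process is handling requests; checking back-end services as well is a separate choice.

```javascript
// Request handler with a dedicated health URL. The path '/health' is an
// assumption; use whatever path the probes are configured with.
function handler(req, res) {
  if (req.url === '/health') {
    // Cheap, immediate answer so the probe does not hit its timeout.
    res.writeHead(200, { 'Content-Type': 'text/plain' });
    res.end('OK');
    return;
  }
  // Placeholder for the application's real pages.
  res.writeHead(200, { 'Content-Type': 'text/html' });
  res.end('<h1>Hello</h1>');
}

module.exports = { handler };
```

The handler would be passed to http.createServer(handler); the HTTP GET path of both probes in the deployment configuration would then be /health on the container's port (8080 in these examples).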

Andreas

May 23, 2017, 6:45:50 PM

to OpenShift

Graham,

after I tried to add a liveness check, I immediately started getting the errors from my first post,

and here is what the logs showed:

```
--> Scaling up easy-2 from 0 to 1, scaling down easy-1 from 1 to 0 (keep 1 pods available, don't exceed 2 pods)
    Scaling easy-2 up to 1
    Scaling easy-1 down to 0
--> Success
```

So why did it not go beyond 2 pods?

Andreas

May 23, 2017, 6:49:35 PM

to OpenShift

And this is how it is now, after adding a liveness check:

Graham Dumpleton

May 23, 2017, 6:53:50 PM

to open…@googlegroups.com

If you set replicas to more than 2, you wouldn’t be able to go beyond 2 pods, because the overall memory requirement for 2 pods is already at the memory limit for the account. The scheduler would know this and would constantly show it as trying to scale to the final number, but would never actually be able to.
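The arithmetic behind this, assuming from the figures in this thread a 1 GiB (1024 MiB) memory limit and 512 MiB per pod: at most 2 pods fit. The same limit is consistent with the rollout log above ("keep 1 pods available, don't exceed 2 pods"), since a rolling deployment of 1 replica briefly runs the old and the new pod side by side. The function names are illustrative.

```javascript
// How many pods of a given memory size fit under an account-wide limit?
function maxPods(limitMiB, perPodMiB) {
  return Math.floor(limitMiB / perPodMiB);
}

// Does a rolling deployment fit? During the rollout up to
// replicas + maxSurge pods exist at the same time.
function rolloutFits(replicas, maxSurge, perPodMiB, limitMiB) {
  return (replicas + maxSurge) * perPodMiB <= limitMiB;
}

module.exports = { maxPods, rolloutFits };
```

maxPods(1024, 512) is 2; rolloutFits(1, 1, 512, 1024) is true, while rolloutFits(2, 1, 512, 1024) is false, because the third pod needed during the rollout would exceed the limit.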

Graham Dumpleton

May 23, 2017, 6:55:35 PM

to open…@googlegroups.com

Which indicates that responses may not be coming back quick enough and so health checks are thinking the application is failing.

What is the application implemented in? How many concurrent requests can it handle? Is the URL you used for the health checks something simple that will actually respond immediately?


Andreas

May 23, 2017, 7:47:14 PM

to OpenShift

This is a JavaServer Faces application.

I do not know how many concurrent requests it can handle. I thought that depends on the container, i.e. the OpenShift platform?

For the health checks I used an application tutorial page, which I think is static.

But after I removed the readiness probe, I cannot find where to add it again.

Andreas

May 23, 2017, 8:10:39 PM

to OpenShift

I found where to change the liveness and readiness probes. Now the question is: is it allowed for them to refer to the same page?

And isn’t 10 seconds too long for the wait?

And what is the initial delay? What is its purpose?

Graham Dumpleton

May 25, 2017, 4:20:43 AM

to open…@googlegroups.com

> On 24 May 2017, at 3:10 AM, Andreas <cinem…@gmail.com> wrote:

>

> I found where to change the liveness and readiness probes. Now the question is: is it allowed for them to refer to the same page?

Using the same URL is fine.

> And isn’t 10 seconds too long for the wait?

OpenShift will not care what you set timeoutSeconds to. You just need to ensure it is larger than how long that URL would normally take. Make sure you take into account the time for talking to back-end services if the probe URL talks to a database etc., so as to check that the whole system is working and not just that the web server is handling requests.
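When the probe URL also checks a back-end service, one way to keep its response time predictable is to bound that check by a time budget that stays below timeoutSeconds and report failure if the budget runs out. A minimal sketch; checkFn stands for a hypothetical async dependency check such as a database ping, and the budget value is up to you.

```javascript
// Reject if `promise` has not settled within `ms` milliseconds.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// HTTP status for the probe: 200 if the dependency check succeeds within the
// budget, 503 if it fails or is too slow. checkFn is hypothetical.
async function healthStatus(checkFn, budgetMs) {
  try {
    await withTimeout(checkFn(), budgetMs);
    return 200;
  } catch (err) {
    return 503;
  }
}

module.exports = { withTimeout, healthStatus };
```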

> And what is the initial delay? What is its purpose?

That is to avoid false negatives when your application pod is first starting up and not yet handling requests.

Graham
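The initial delay only postpones the first probe. If start-up can take longer than that, a readiness endpoint can report 503 until start-up work has finished, so the pod is not added to the service endpoints too early. A minimal sketch; createReadiness and markReady are hypothetical names.

```javascript
// Readiness gate: the probe URL answers 503 until start-up work has
// finished, then 200, so the pod only joins the service endpoints once it
// can actually serve requests.
function createReadiness() {
  let ready = false;
  return {
    // Call once start-up work (config, caches, connections) is complete.
    markReady() {
      ready = true;
    },
    // Handler for the readiness probe's URL.
    handle(req, res) {
      res.writeHead(ready ? 200 : 503, { 'Content-Type': 'text/plain' });
      res.end(ready ? 'ready' : 'starting');
    },
  };
}

module.exports = { createReadiness };
```

The app would call markReady() once its start-up work completes. If a liveness probe pointed at the same gate, those early 503 responses would count as failures, which is why the liveness probe's initial delay should exceed the start-up time.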

I have an application running on the OpenShift Online starter tier that has been working for the last 5 months. It is a single pod behind a service, with a route that performs edge TLS termination.

Since Saturday, trying to access the application returns the error message

Application is not available

The application is currently not serving requests at this endpoint. It may not have been started or is still starting.

Possible reasons you are seeing this page:

- The host doesn’t exist. Make sure the hostname was typed correctly and that a route matching this hostname exists.

- The host exists, but doesn’t have a matching path. Check if the URL path was typed correctly and that the route was created using the desired path.

- Route and path matches, but all pods are down. Make sure that the resources exposed by this route (pods, services, deployment configs, etc) have at least one pod running.
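The three reasons in that list amount to a lookup that can fail at three points. A simplified model of it, assuming routes are matched on the exact host name and then on the longest path prefix; it illustrates the reasons on the page and is not the router's actual implementation. The readyEndpoints field is a hypothetical stand-in for the ready pods behind the route's service.

```javascript
// Simplified model of matching a request to a route. Each route has a host,
// an optional path prefix ('' means any path) and a count of ready endpoints.
function matchRoute(routes, host, path) {
  const forHost = routes.filter((r) => r.host === host);
  if (forHost.length === 0) return { ok: false, reason: 'host does not exist' };

  // Among this host's routes, the longest matching path prefix wins.
  const candidates = forHost
    .filter((r) => path.startsWith(r.path || '/'))
    .sort((a, b) => (b.path || '/').length - (a.path || '/').length);
  if (candidates.length === 0) return { ok: false, reason: 'no matching path' };

  const route = candidates[0];
  if (route.readyEndpoints === 0) return { ok: false, reason: 'all pods are down' };
  return { ok: true, route: route.name };
}

module.exports = { matchRoute };
```

With the earlier example route (host my-route-my-project.apps.openshift.example.com, Path /example), a request for / on that host falls into the second case, while a request for /example/page matches the route.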

The pod is running: I can exec into it and verify that, and I can port-forward to it and access it.

Checking the various components with oc:

```
$ oc get po -o wide
NAME              READY   STATUS    RESTARTS   AGE   IP             NODE
taboo3-23-jt8l8   1/1     Running   0          1h    10.128.37.90   ip-172-31-30-113.ca-central-1.compute.internal

$ oc get svc
NAME     CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
taboo3   172.30.238.44   <none>        8080/TCP   151d

$ oc describe svc taboo3
Name:              taboo3
Namespace:         sothawo
Labels:            app=taboo3
Annotations:       openshift.io/generated-by=OpenShiftWebConsole
Selector:          deploymentconfig=taboo3
Type:              ClusterIP
IP:                172.30.238.44
Port:              8080-tcp  8080/TCP
Endpoints:         10.128.37.90:8080
Session Affinity:  None
Events:            <none>

$ oc get route
NAME     HOST/PORT                                                    PATH   SERVICES   PORT       TERMINATION     WILDCARD
taboo3   taboo3-sothawo.193b.starter-ca-central-1.openshiftapps.com          taboo3     8080-tcp   edge/Redirect   None
```

I also tried adding a new route (with and without TLS), but I get the same error.

Does anyone know what could cause this and how to fix it?

Update, April 17, 2018: I received an email from OpenShift Online support:

It looks like you may be affected by this bug.

So I am waiting for a fix.